Data Science has been a word on everyone’s tongue for quite some time now. According to some surveys, since 2012 there has been a 650% increase in the potential of this domain to become the hottest profession to choose and work in.

So what is Data Science? The concept has become so broad that almost any work with data can be called Data Science: data processing, cleaning, visualization. If you build graphs in Excel from your company’s sales tables, you are already doing Data Science.

Nevertheless, the term Data Science customarily covers a number of data processing areas, such as data mining, machine learning, deep learning, and big data.

Data Science is described well using a Venn diagram:

In general, Data Science really is at the intersection of disciplines. You need knowledge of mathematics and statistics, programming skills, and, of course, domain knowledge. Data Science should primarily solve business tasks.

And this leads to the need for soft skills. If you know all of this and can apply it together, you can achieve impressive results and a corresponding six-figure salary.

As you can see, the salaries for this profession get quite high.

That is a first indication that it’s not that easy to become a Data Scientist – read on to learn how to start.

But you don’t have to know everything to get started, or even get a job as a Junior Data Scientist. The amount of information to learn can be daunting, but fear not, it all starts with small steps.

The average Data Scientist salary, according to Glassdoor surveys, is $113,000 per year. And the need for specialists only grows over time.

Steps to Become a Data Scientist – Entry Plan

Here is our plan for how to jump into data science.

- Learning Python (a programming language)

- Study scientific disciplines

- Understand how to get the data

- Data analysis and visualization

- Machine learning and neural networks

- Practical application of skills

- Proof of qualifications

Learning Python

Why Python?

The most common programming languages in Data Science are R and Python. Let’s first compare their popularity according to the TIOBE index. Below are the graphs for Python and R.

Python has been gaining ground since 2014 and is now about 12%. The new R release in October 2020 has brought back interest in it, but it is quickly waning.

Advantages of Python

- Easy to learn, easy to write, easy to read

Python syntax is as easy to understand as possible for a beginner. It is concise and highly readable; one of Python’s principles is that code should be as readable as possible. The mandatory indentation also helps with this.

- Cross-platform

Python is suitable for different platforms: you can write and run it on Linux, Windows, macOS, and even mobile platforms.

- Strong community

There are many conferences on Python where you can meet colleagues and learn about current issues and challenges facing Python programmers. For now they are held only online, but they are still useful.

In most common cases, Python already has ready-made libraries for many business tasks. You don’t have to reinvent the wheel; you can just use what you already have.

- OOP paradigm support

Allows you to write large projects and easily reuse legacy code.

- Versatility

If you learn Python, you can not only do Data Science but also, for example, automate routine tasks or do web or application development. GIMP and Blender, for example, use Python for scripting and plugins.

How do you learn Python?

Learning a programming language can seem like a daunting task in and of itself. But here too you can proceed step by step.

Syntax

Start with the syntax of the language. You can choose one of the paid or free courses on Udemy, or pay for a subscription on one of the most popular learning platforms, learnpythonthehardway. There is a great free course from the Python Institute with support from Cisco. By the way, when you take this course, you get a 50% discount coupon for the PCAP – Certified Associate in Python Programming certification exam. I also like the approach of JetBrains: you do not just learn the syntax, but immediately build mini-projects that you have to save on GitHub. You learn Python and work with a version control system at the same time, which will help you in real work. Of course, at any level, you shouldn’t forget about the official Python website, where you can find plenty of good documentation. By the way, reading documentation is one of the skills worth developing to get into Data Science. It will help you both as a beginner and as an experienced developer.

Mini projects

After learning the essential basics of the language, you should immediately start completing some mini-projects. You can find many Python project ideas for beginners.

Here are a few examples of projects for beginners:

- Dice rolling simulator

- Hangman game

- Snake game

- Caesar cypher encoder-decoder

- Tic-tac-toe
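
To give a sense of the scale of such a project, here is a minimal sketch of the Caesar cipher encoder-decoder from the list above. The function name `caesar` and the choice to leave non-letters untouched are our own assumptions; a fuller project would add user input and error handling.

```python
import string


def caesar(text: str, shift: int) -> str:
    """Shift every ASCII letter in text by shift positions, wrapping around."""
    result = []
    for ch in text:
        if ch in string.ascii_lowercase:
            result.append(chr((ord(ch) - ord("a") + shift) % 26 + ord("a")))
        elif ch in string.ascii_uppercase:
            result.append(chr((ord(ch) - ord("A") + shift) % 26 + ord("A")))
        else:
            result.append(ch)  # digits, spaces and punctuation stay unchanged
    return "".join(result)


encoded = caesar("Hello, World!", 3)
decoded = caesar(encoded, -3)  # decoding is encoding with the opposite shift
print(encoded)  # Khoor, Zruog!
print(decoded)  # Hello, World!
```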

Google Colab will be handy at this stage. It is a free online notebook with code execution. You can take training notes and immediately write small snippets of code that you can execute. Below is an example from the welcome notebook of the service.

Documentation and Testing

Get in the habit of writing documented code right from the start. You write code once but read it many times. Take care of whoever will read your code; perhaps it will be you, a few years from now, when you have already forgotten everything about the project. Write comments in the code. They should not be redundant, but wherever a question may arise, a comment is worth writing. Your functions will not be complicated in the first stages, but still, write docstrings for them. For elementary functions, one-line docstrings are enough; for more complex ones, specify what the function takes as arguments, what it does, and what it returns. If there are more complex parameters, describe their possible values and how they affect the function’s behavior. You should also pay attention to testing your functions. The standard library module doctest lets you test the code examples in a function’s docstring.
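
As an illustration, here is a small sketch of a documented function whose docstring examples are checked by doctest. The function `mean` is a made-up example, not taken from any particular course.

```python
import doctest


def mean(values):
    """Return the arithmetic mean of a non-empty sequence of numbers.

    >>> mean([1, 2, 3, 4])
    2.5
    >>> mean([10])
    10.0
    """
    return sum(values) / len(values)


if __name__ == "__main__":
    # Runs every ">>>" example found in this module's docstrings
    # and reports how many were attempted and how many failed.
    print(doctest.testmod())
```

If an example in the docstring stops matching what the function returns, doctest reports the mismatch, so the documentation cannot silently drift away from the code.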

Before starting development, you need to understand what the function will do and what it will return. You should write tests for a function before you implement the function itself. First, we write tests, which, of course, will fail, and then we implement the function so that the tests begin to pass. This approach is called test-driven development.
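
A minimal sketch of what this can look like with the standard `unittest` module. The function `normalize` and its expected behavior are hypothetical; in a test-driven workflow the `TestNormalize` class would be written first, and `normalize` would be filled in afterwards until the tests pass.

```python
import unittest


def normalize(values):
    """Scale numbers linearly so the smallest becomes 0.0 and the largest 1.0."""
    lo, hi = min(values), max(values)
    if lo == hi:
        return [0.0 for _ in values]  # constant input: avoid division by zero
    return [(v - lo) / (hi - lo) for v in values]


class TestNormalize(unittest.TestCase):
    def test_scales_to_unit_range(self):
        self.assertEqual(normalize([2, 4, 6]), [0.0, 0.5, 1.0])

    def test_constant_input(self):
        self.assertEqual(normalize([5, 5]), [0.0, 0.0])


# Run the tests explicitly so the example also works inside a notebook.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestNormalize)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```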

Algorithms and Data Structures

Next, dive into algorithms and data structures. To many, this seems redundant and not useful. Still, studying algorithms builds a mindset that helps you understand tasks better and decompose them into elementary blocks. This skill is extremely useful for data processing. You can find a suitable course on algorithms for free at Khan Academy. When studying an algorithm, it is not enough to look at someone else’s code and understand it. To check whether you really understood it, try to write the algorithm the next day without peeking at someone else’s code. If you can do this, you really understood the algorithm; if not, devote a little more time to studying it. Do the same with any code that you find somewhere, for example on Stack Overflow. Do not copy-paste the code into your project. At least re-type it from the site, or better, understand it well enough that you can write it without looking at the source.
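
Binary search is a typical example of an algorithm worth re-implementing from memory. The sketch below is one common way to write it; it assumes the input list is already sorted in ascending order.

```python
def binary_search(sorted_items, target):
    """Return the index of target in sorted_items, or -1 if it is absent."""
    lo, hi = 0, len(sorted_items) - 1
    while lo <= hi:
        mid = (lo + hi) // 2
        if sorted_items[mid] == target:
            return mid
        if sorted_items[mid] < target:
            lo = mid + 1   # target can only be in the right half
        else:
            hi = mid - 1   # target can only be in the left half
    return -1


numbers = [1, 3, 5, 7, 9, 11]
print(binary_search(numbers, 7))  # 3
print(binary_search(numbers, 4))  # -1
```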

Object-Oriented Programming

It is worth learning at least the basics of object-oriented programming. In a sense, when learning Python, this is inevitable, because in Python absolutely everything is an object: functions, variables, data types. Learning OOP will allow you to understand exactly what you are doing when you call a particular method. It will also allow you to write your own classes that inherit from library ones. You can use this to create modified versions of various classifiers, for example, or to design neural networks.
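
Here is a small sketch of inheritance. Both classes are made up for illustration and only imitate the `fit`/`predict` style that many machine learning libraries use; the subclass reuses `predict` and overrides only `fit`.

```python
class MeanPredictor:
    """Baseline model: always predicts the mean of the training targets."""

    def fit(self, targets):
        self.value_ = sum(targets) / len(targets)
        return self

    def predict(self, n):
        return [self.value_] * n


class MedianPredictor(MeanPredictor):
    """Inherits predict() unchanged and overrides only fit()."""

    def fit(self, targets):
        ordered = sorted(targets)
        mid = len(ordered) // 2
        if len(ordered) % 2:
            self.value_ = ordered[mid]
        else:
            self.value_ = (ordered[mid - 1] + ordered[mid]) / 2
        return self


prices = [100, 120, 130, 900]
print(MeanPredictor().fit(prices).predict(2))    # [312.5, 312.5]
print(MedianPredictor().fit(prices).predict(2))  # [125.0, 125.0]
```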

Study scientific disciplines

You don’t have to study all of Python before you start studying some math. Some of the most daunting subjects for newbies are linear algebra, calculus, probability theory, and mathematical statistics. You will need different knowledge from these disciplines at different levels and positions, but you need to know at least the following:

- Matrices and operations on matrices

- Taking derivatives and integration

- Basic concepts of set theory

- Basics of combinatorics (combinations, permutations)

- Fundamentals of probability theory

- The central limit theorem

- Fundamentals of mathematical statistics

Start with matrices. They are the foundation: you will continuously operate with matrices in Data Science, including multidimensional ones, which are difficult to picture in your head. Such multidimensional arrays are called tensors. It is good to understand what a determinant, a linear space, and an orthogonal basis are.

While learning to work with matrices, pay attention to numpy, a basic Python library for Data Science. It is ideal for working with matrices, including multidimensional ones, and it implements a large number of algorithms for processing them. The methods in numpy are well optimized and execute quickly. You can start with the materials on the library’s official site.
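
A short sketch of the basic matrix operations in numpy. The matrices are arbitrary example values.

```python
import numpy as np

a = np.array([[1.0, 2.0],
              [3.0, 4.0]])
b = np.array([[0.0, 1.0],
              [1.0, 0.0]])

product = a @ b            # matrix multiplication
transposed = a.T           # transpose
det = np.linalg.det(a)     # determinant: 1*4 - 2*3 = -2

# A three-dimensional array ("tensor") with shape 2 x 3 x 4.
tensor = np.zeros((2, 3, 4))

print(product)
print(transposed)
print(det)
print(tensor.ndim, tensor.shape)
```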

At this stage, I advise you to turn your attention to the channel 3blue1brown. Grant Sanderson explains the basics of mathematics in simple language. First look at the playlists Essence of linear algebra and Essence of calculus.

You will need to understand what random events are, how to calculate their probabilities, and what a probability distribution is. For example, watch the course High school statistics at Khan Academy. By the way, probability theory is also useful in everyday life. If you have studied at least its basics, you most likely will not spend your money on lottery tickets or in a casino.
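
One way to build intuition for probabilities is to compare an exact calculation with a simulation. The sketch below estimates the probability that two dice sum to 7; the number of trials and the seed are arbitrary choices.

```python
import random

rng = random.Random(42)   # fixed seed so the run is repeatable
trials = 100_000

# Count how often two fair six-sided dice sum to 7.
hits = sum(
    1 for _ in range(trials)
    if rng.randint(1, 6) + rng.randint(1, 6) == 7
)

estimate = hits / trials
exact = 6 / 36            # 6 favorable outcomes out of 36 equally likely ones

print(f"simulated: {estimate:.4f}, exact: {exact:.4f}")
```

With more trials the simulated value tends to get closer to the exact one, which is the law of large numbers at work.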

If all this is too difficult for you, do not despair. Modern libraries such as tensorflow, keras, and scikit-learn take the whole burden of calculations on themselves. Still, understanding what is inside is extremely useful and allows you to use these tools better.

Understand how to get the data

Part of the data you receive will be in tables in various formats, such as CSV, Excel, JSON, and HDF. The Python library pandas lets you load and process all these formats and many others. A Data Scientist needs to study this library; you can start from the page 10 minutes to pandas on the official website or with the mini-courses on Kaggle.
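
A minimal sketch of loading tabular data with pandas. To keep the example self-contained, the CSV content is an in-memory string with invented values; with a real file you would pass its path to `pd.read_csv` instead.

```python
import io

import pandas as pd

# Invented sample data standing in for a CSV file on disk.
csv_text = """city,month,sales
Berlin,Jan,100
Berlin,Feb,120
Paris,Jan,90
"""

df = pd.read_csv(io.StringIO(csv_text))

print(df.head())     # first rows of the table
print(df.dtypes)     # column types inferred by pandas

total_by_city = df.groupby("city")["sales"].sum()
print(total_by_city)
```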

A lot of the data that you will explore in real business problems will not be located in Excel tables (although this also happens) but in various databases (DB). A database is a systematized collection of data intended for processing by a computer. A database management system (DBMS) is used to manage the data in the DB.

There are many different DBs and DBMSs nowadays, but most of them support the structured query language (SQL). You should definitely learn at least the basics of this language. There is an excellent free course and an online simulator.
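
You can practice basic SQL without installing a database server, because Python ships with the `sqlite3` module. The table name and rows below are invented for illustration.

```python
import sqlite3

# An in-memory database: nothing is written to disk.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (city TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("Berlin", 100.0), ("Berlin", 120.0), ("Paris", 90.0)],
)

# Total sales per city, largest first.
rows = conn.execute(
    "SELECT city, SUM(amount) AS total "
    "FROM sales GROUP BY city ORDER BY total DESC"
).fetchall()
conn.close()

print(rows)  # [('Berlin', 220.0), ('Paris', 90.0)]
```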

It will also be useful to study getting data from various sites, known as web scraping. To do this, you will often use Python libraries such as beautifulsoup, scrapy, and selenium.
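
The idea behind scraping can be sketched with the standard library alone. The example below uses `html.parser` rather than any of the libraries named above, and it parses a hard-coded HTML string instead of downloading a real page; the class name `LinkCollector` is our own.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect the href attribute of every <a> tag in a document."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


# A made-up page; in practice the HTML would come from an HTTP response.
page = (
    '<html><body><a href="/jobs">Jobs</a><p>text</p>'
    '<a href="/courses">Courses</a></body></html>'
)

collector = LinkCollector()
collector.feed(page)
print(collector.links)  # ['/jobs', '/courses']
```

Dedicated scraping libraries add conveniences such as CSS selectors, crawling, and browser automation on top of this basic parse-and-extract pattern.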

Data analysis and visualization

Data that you receive at the input can be heterogeneous, contain gaps and repetitions. Often you have to combine tables in various ways, transform, filter, and replace values. You can do all of this using the pandas library, and if you are going to work with big data, you should start learning about pyspark.

General principles of data processing and cleaning can be gleaned, for example, from this book. Pay special attention to Chapter 7 Data wrangling: Clean, Transform, Merge, Reshape. You need to learn data cleansing: what to do with gaps and repetitions, what to do with outliers, and how to evaluate the quality of the data and its suitability for further processing. You also need to learn how to manipulate data: selecting a part of it, grouping, filtering, sorting, aggregating, and building pivot tables.
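
A short sketch of typical cleaning steps in pandas: removing repetitions, filling gaps, then grouping and sorting. The data is invented, and filling gaps with the median is only one of several reasonable strategies.

```python
import pandas as pd

raw = pd.DataFrame({
    "city": ["Berlin", "Berlin", "Paris", "Paris", "Rome"],
    "sales": [100.0, 100.0, None, 90.0, 70.0],
})

# 1. Drop exact duplicate rows (the second Berlin row).
clean = raw.drop_duplicates()

# 2. Fill the missing value with the median of the known values.
clean = clean.fillna({"sales": clean["sales"].median()})

# 3. Group, aggregate and sort.
summary = (
    clean.groupby("city")["sales"]
    .agg(["count", "mean"])
    .sort_values("mean", ascending=False)
)

print(clean)
print(summary)
```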

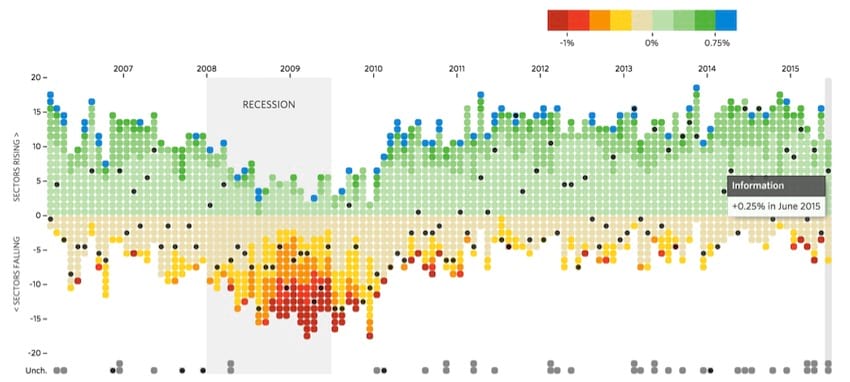

Data visualization allows you to literally see different patterns in your data, so in Data Science it is useful to build various visualizations. Charts are also vital for communicating with business units. You should be able to plot line charts, rectangle plots, scatter plots, histograms, heat maps, violin charts, and more, and also understand what they represent. The matplotlib and seaborn libraries are useful for visualizing data in Python.
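
As a sketch, here is how a histogram and a scatter plot might be built with matplotlib. The data is randomly generated for illustration, and the figure is rendered off-screen into a buffer; in a notebook you would simply call `plt.show()` instead.

```python
import io
import random

import matplotlib
matplotlib.use("Agg")  # render without opening a window
import matplotlib.pyplot as plt

# Synthetic data: apartment area and a noisy price that depends on it.
rng = random.Random(0)
area = [rng.uniform(30, 120) for _ in range(200)]
price = [2.5 * a + rng.gauss(0, 20) for a in area]

fig, (left, right) = plt.subplots(1, 2, figsize=(10, 4))

left.hist(price, bins=20)
left.set_title("Distribution of price")
left.set_xlabel("price")

right.scatter(area, price, s=10)
right.set_title("Price vs. area")
right.set_xlabel("area")
right.set_ylabel("price")

fig.tight_layout()

buffer = io.BytesIO()
fig.savefig(buffer, format="png")  # pass a file name here to save to disk
```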

Besides, there are several popular tools for building interactive charts and data presentations. The most popular are Tableau, PowerBI, and Qlikview. It is unnecessary to study them right away, but it is worth keeping in mind if you have free time.

Machine learning and neural networks

Classical machine learning is divided into 3 types. Let’s say a few words about each and give examples.

Machine learning

Supervised

The target value or label is already specified in our dataset. For example, in predicting real estate prices, we will have features of different real estate objects and these objects’ prices. And our model will try to predict the price of a new property only by its features, without specifying the price. In classification, the situation is the same, but we are trying to predict not some continuous value, but to determine to which group this or that object belongs.

- Regression

  - Score prediction

  - Weather forecasting

  - Estimation of different values

  - Market forecasting

- Classification

  - Fraud detection

  - Spam detection

  - Medical diagnostics

  - Image classification
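
The real estate example can be sketched with scikit-learn. The data below is synthetic: we assume a single feature (area) and generate prices as a linear function of it plus noise, so the numbers mean nothing beyond illustration.

```python
import numpy as np
from sklearn.linear_model import LinearRegression
from sklearn.model_selection import train_test_split

# Synthetic dataset: price = 2.5 * area + 10 + noise.
rng = np.random.default_rng(0)
area = rng.uniform(30, 120, size=200)
price = 2.5 * area + 10 + rng.normal(0, 5, size=200)

X = area.reshape(-1, 1)   # features must be a 2-D array
X_train, X_test, y_train, y_test = train_test_split(
    X, price, test_size=0.25, random_state=0
)

# Train on labeled examples, then evaluate on data the model has not seen.
model = LinearRegression().fit(X_train, y_train)
r2 = model.score(X_test, y_test)

# Predict the price of a new property from its features only.
predicted = model.predict(np.array([[80.0]]))

print(model.coef_, model.intercept_)
print(r2)
print(predicted)
```

For classification the workflow is the same, except that the target is a group label and the model is a classifier rather than a regressor.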

Unsupervised

The data do not have labels in advance, and we do not know exactly what we are looking for. The model analyzes the presented sample and, based on some features, tries to divide it into groups. We cannot say in advance what kind of groups they will be; only a human, after some additional analysis, can give names to the categories. In dimensionality reduction, we try to reduce the number of features under study with the least loss of information, to make subsequent calculations easier.

- Dimensionality reduction

  - Meaningful compression

  - Face recognition

  - Data visualization

- Clustering

  - Recommender systems

  - Targeted marketing

  - Customer segmentation
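
Clustering can be sketched with the k-means algorithm from scikit-learn. The points are synthetic: two well-separated groups that the model receives without any labels. Choosing `n_clusters=2` is an assumption we make because we generated the data ourselves; on real data the number of groups is usually unknown.

```python
import numpy as np
from sklearn.cluster import KMeans

# Two synthetic groups of 2-D points around (0, 0) and (5, 5).
rng = np.random.default_rng(0)
group_a = rng.normal(loc=[0, 0], scale=0.5, size=(50, 2))
group_b = rng.normal(loc=[5, 5], scale=0.5, size=(50, 2))
points = np.vstack([group_a, group_b])

# The model sees only the coordinates, not which group a point came from.
kmeans = KMeans(n_clusters=2, n_init=10, random_state=0).fit(points)
labels = kmeans.labels_

print(labels[:5], labels[-5:])
print(kmeans.cluster_centers_)
```

The cluster numbers themselves are arbitrary; it is up to a human to look at each group and decide what it represents.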

Reinforcement

In reinforcement learning, the system receives signals from the external environment about whether it did the right thing or not. These signals allow it to accumulate experience of doing the right thing and to act according to this experience. For example, an AI that plays Mario uses the number of points and the death of the game character to learn how to play. It chooses random actions at first and remembers which ones lead to the best results.

- Game AI

- Autopilot

- Real-time decisions
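
The idea of trying random actions and remembering which ones pay off can be sketched with a toy problem that is much simpler than a game: a three-armed bandit solved with an epsilon-greedy strategy. The win probabilities, the value of `epsilon`, and the number of steps are all arbitrary assumptions.

```python
import random

rng = random.Random(0)

win_probability = [0.2, 0.5, 0.8]  # the environment; hidden from the agent
estimates = [0.0, 0.0, 0.0]        # the agent's current estimate per action
pulls = [0, 0, 0]                  # how often each action has been tried
epsilon = 0.1                      # share of steps spent on random exploration

for step in range(5000):
    if rng.random() < epsilon:
        action = rng.randrange(3)                            # explore
    else:
        action = max(range(3), key=lambda a: estimates[a])   # exploit

    # The environment answers with a reward signal: 1 for a win, 0 otherwise.
    reward = 1.0 if rng.random() < win_probability[action] else 0.0

    # Update the running average of rewards for the chosen action.
    pulls[action] += 1
    estimates[action] += (reward - estimates[action]) / pulls[action]

best = max(range(3), key=lambda a: estimates[a])
print(estimates, pulls, best)
```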

One of the most popular free machine learning courses is the Stanford University course on Coursera. Unfortunately, it uses MATLAB in the learning process, but the theoretical foundations and mathematics underlying various machine learning algorithms are covered in great detail.

At this stage, you will need the scikit-learn library for practice. It implements a lot of machine learning algorithms, from the simplest to the most complex. It is popular and easy to use. It is so easy that it can be tempting to use it without understanding what is going on; we do not advise falling into this trap.

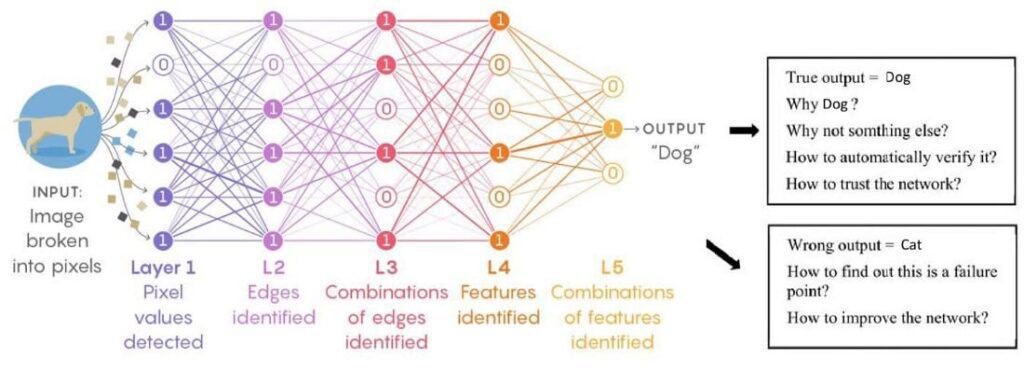

Neural networks and deep learning are the next stage after learning classic machine learning algorithms. You may not get to it: many tasks do not need deep learning, and classical machine learning methods are enough to deal with them. Neural networks are built on the principles of organizing biological neural networks, such as the human brain. Of course, modern neural networks are still far from the scale of the human brain, which contains about 86 billion neurons.

A single network cell is elementary – it has several inputs and one output. The cell sums inputs with specific coefficients, and then the resulting sum is passed through the non-linear element. Nonlinearity must be present in a neural network; otherwise, it will be pointless to create neural networks with multiple layers. Neural network training consists of the automatic selection of the summation coefficients of the inputs of all neurons.
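
The description above can be sketched in a few lines of numpy. We assume a sigmoid as the non-linear element, which is only one of several common choices, and all weights are arbitrary example values. The second half shows why the non-linearity matters: two layers without it collapse into a single linear layer.

```python
import numpy as np


def sigmoid(z):
    """A common non-linear element: squashes any number into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


def neuron(inputs, weights, bias):
    """Weighted sum of the inputs, passed through the non-linearity."""
    return sigmoid(np.dot(inputs, weights) + bias)


x = np.array([1.0, 2.0, 3.0])
w = np.array([0.5, -0.25, 0.0])
out = neuron(x, w, bias=0.0)   # weighted sum is 0.5 - 0.5 + 0 = 0
print(out)                     # 0.5

# Without a non-linearity, stacking layers adds nothing:
W1 = np.array([[1.0, 2.0, 0.0],
               [0.0, 1.0, 1.0]])   # first layer: 3 inputs -> 2 outputs
W2 = np.array([[2.0, -1.0]])       # second layer: 2 inputs -> 1 output

two_layers = W2 @ (W1 @ x)
one_layer = (W2 @ W1) @ x          # a single equivalent matrix
print(two_layers, one_layer)       # both are [5.]
```

Training a network means adjusting values such as `w` and `bias` automatically so that the outputs get closer to the desired ones.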

Coursera has a course Deep Learning Specialization, which will allow you to understand the basics of building neural networks in sufficient detail. Learn what neural networks are, how Shallow and Deep neural networks are built, and what hyperparameters are and how networks are trained and optimized.

If you are going to study neural networks and deep learning, you will need the tensorflow library. Google developed this library for internal use and open-sourced it in 2015. It quickly won the love of Data Scientists around the world and brought neural network technology to the masses.

Practical application of skills

As you learn, from the earliest stages, you need to complete various projects. If you just read something and do not reinforce it in real code, it quickly disappears from your head. We have already written that it is good to carry out various mini-projects in Python at the initial stages. When you start studying libraries for machine learning, it is worth taking up Kaggle. As written in the Kaggle Community Guidelines: “Kaggle’s community is made up of data scientists and machine learners from all over the world with a variety of skills and backgrounds.” There are many different competitions in this community in which you can participate. Do not be afraid of the word “competition”; you do not need to win or take first place at the beginning. For starters, you just need to try to do something. For many competitions, there are open notebooks from participants with solutions and advice. You can view them on the Notebooks tab. Take, for example, the Titanic competition, which is recommended as a first one to try. It poses a classification problem. After your first attempt, you probably won’t end up too high on the leaderboard. That is the time to explore the open notebooks of other participants. Look for different ways of processing and preparing data, building models, and choosing parameters, and collect best practices in your own notebook. Build your own pipelines for analyzing different data.

Here are a few more competitions for beginners. We advise you to start with them, and when you gain confidence, you can take on other competitions, including those with cash prizes.

- Housing Prices Competition for Kaggle Learn Users – regression problem

- Digit Recognizer – computer vision

- Facial Keypoints Detection – image processing

- Bag of Words Meets Bags of Popcorn – natural language processing

- Predict Future Sales – time series analysis

Proof of qualifications

After studying everything required to become a Data Scientist and taking part in various competitions on Kaggle, you should look for confirmation of your qualifications. Winning a competition on Kaggle is a good one. Kaggle assigns ranks for competition results, and starting from the Master level, finding employment is rarely a problem.

You can also upload your notebooks and pipelines to GitHub. A repository on GitHub is a good indication of what you can actually do in Data Science. And putting your code on public display disciplines you to write comments and clean code.

How long will it take?

Looking at the entire amount of knowledge and skills you need to obtain, a natural question arises: how long will it take for all this? Of course, assessments can be individual, but it is worth understanding that entering a new profession is a long run. Depending on your initial skills and knowledge, this can take anywhere from six months to a year of hard work.

Conclusions

The Data Scientist profession is in demand and well paid, but to become a professional, you need to acquire a fairly large set of skills and knowledge. Such work is becoming even more relevant because it can be performed remotely, without being in the office.

The sooner you start learning to become a Data Scientist, the faster you will come to success.

We advise you to start by learning the Python language. Even if you later realize that mathematics is not for you at all, you can continue to grow as a Python developer. Do practical mini-projects as early as possible in the learning process, document your code, and communicate with the professional community.

All of this will help you achieve your goal as quickly as possible. The main thing is to take the first step. The walker will master the road.